Objective Structured Clinical Examinations (OSCEs) are widely used in health professions education and are often regarded as the gold standard for assessing clinical and communication skills. They combine structured scenarios, standardized patients, and observation checklists to test clinical reasoning, communication, and technical skills under controlled conditions. OSCEs do more than assess knowledge and skills; they also lay the groundwork for the next step in competency-based medical education: Entrustable Professional Activities (EPAs). While OSCEs are praised for their objectivity, trainers often struggle with logistics, faculty time, and documentation. This blog explores how trainers can design and run video-based OSCEs, and how structured digital tools such as Videolab can help address these practical challenges.

Why Are OSCEs Complex to Implement?

OSCEs are designed to simulate real clinical encounters, but several layers of complexity are inherent in their execution:

- Station design: Each station must have clear objectives, standardized scripts, and scoring rubrics aligned with competencies.

- Standardized patient variability: Even trained actors can introduce subtle differences that affect learner performance.

- Assessor calibration: Multiple examiners may interpret the same behavior differently, threatening reliability.

- Time constraints: Stations typically run in 8–12 minute blocks, creating pressure to observe, judge, and score within a short window.

- Data management: Scores need to be recorded, validated, and linked back to learner portfolios, often across multiple evaluators and locations.

Technical Considerations in OSCE Design

Station Blueprinting

Trainers should map OSCE stations to the curriculum’s learning outcomes and competency framework. The resulting blueprint should balance clinical reasoning, procedural skills, communication, and professionalism across the full set of stations.
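A blueprint is essentially a matrix of stations against competency domains, so a quick coverage count can reveal gaps before an exam is finalized. The Python sketch below assumes a hypothetical blueprint: the station names, their domain tags, and the minimum of two stations per domain are illustrative choices, not fixed rules. It counts how many stations assess each of the four domains named above.

```python
from collections import Counter

# Hypothetical blueprint: each station is tagged with the domains it assesses.
# Station names and tags are illustrative, not taken from a real curriculum.
BLUEPRINT = {
    "Interprofessional discharge planning": ["communication", "professionalism"],
    "Breaking bad news": ["communication", "professionalism"],
    "Chest pain assessment": ["clinical reasoning"],
    "IV cannulation": ["procedural skills"],
}

DOMAINS = ["clinical reasoning", "procedural skills", "communication", "professionalism"]


def domain_coverage(blueprint):
    """Count how many stations assess each domain (0 if none do)."""
    counts = Counter(tag for tags in blueprint.values() for tag in tags)
    return {domain: counts[domain] for domain in DOMAINS}


def under_covered(blueprint, minimum=2):
    """Return domains assessed by fewer than `minimum` stations (hypothetical threshold)."""
    return [domain for domain, n in domain_coverage(blueprint).items() if n < minimum]


if __name__ == "__main__":
    print(domain_coverage(BLUEPRINT))
    print(under_covered(BLUEPRINT))
```

With this example blueprint, clinical reasoning and procedural skills are each covered by only one station, so both are flagged as under-covered.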

Example (Interprofessional Collaboration)

Competency Focus: Ability to work effectively within a multidisciplinary team, demonstrating respect, clear communication, and shared decision-making to ensure patient-centered care.

Learner Level: Senior medical students; early postgraduate trainees

Format: Role-play with standardized colleagues (nurse and physiotherapist)

Scenario: “You are the junior doctor on the surgical ward. Your patient is ready for discharge. You must coordinate with the nurse and physiotherapist to create a discharge plan that balances safety, mobility, and family concerns. You have 7 minutes to lead this discussion.”

Duration: 10 minutes (7 minutes interaction, 3 minutes debrief)

Setting: Hospital ward or clinic room

Scenario Script for Standardized Colleagues

- Nurse: Prioritizes patient safety and correct medication use at home. Expresses concern that the patient’s family may not understand the discharge instructions.

- Physiotherapist: Focuses on the patient’s mobility and adherence to the exercise regimen. Expresses frustration if ignored.

- Optional escalation: If the learner dominates the discussion or dismisses input, the colleagues become more assertive to test conflict resolution.

Scoring Rubrics

Rubrics can be analytic (checklist-based) or global rating scales. Analytic rubrics increase objectivity but risk over-fragmentation. Global rating scales capture professional judgment but require calibration. A hybrid approach is common.

Example (Interprofessional Collaboration)

Analytic Checklist (Yes = 1, No = 0)

Communication behaviors

- Introduces self and clarifies team roles.

- Acknowledges contributions from each team member.

- Summarizes or paraphrases team input to confirm understanding.

- Uses open-ended questions to elicit concerns.

Collaboration behaviors

- Addresses conflicting priorities respectfully (e.g., nurse’s safety vs physiotherapist’s mobility).

- Suggests or negotiates a plan integrating multiple viewpoints.

- References patient/family concerns explicitly.

Professional behaviors

- Maintains respectful tone, body language, and nonverbal cues.

Global Rating Scale (1–5)

1 – Unsatisfactory: Ignores input, dominates discussion, no patient-centered perspective.

2 – Borderline: Acknowledges input superficially, limited integration, inconsistent communication.

3 – Competent: Respectful communication, integrates at least two perspectives into plan.

4 – Good: Actively facilitates discussion, balances perspectives, patient/family considered.

5 – Excellent: Demonstrates exemplary teamwork; synthesizes all viewpoints into a coherent, patient-centered plan.
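To make the hybrid approach concrete, the following Python sketch totals the eight checklist items above and rescales the 1–5 global rating onto the same 0–100 range. The item keys and the equal 50/50 weighting in `composite` are assumptions for illustration only; many programs report the two parts separately or set weights through their own standard-setting process.

```python
from dataclasses import dataclass

# Hypothetical keys for the eight checklist behaviors listed above.
CHECKLIST_ITEMS = [
    "introduces_self_and_roles",
    "acknowledges_each_member",
    "summarizes_team_input",
    "uses_open_questions",
    "addresses_conflict_respectfully",
    "negotiates_integrated_plan",
    "references_patient_family",
    "maintains_respectful_tone",
]


@dataclass
class StationScore:
    checklist: dict[str, int]  # item key -> 1 (Yes) or 0 (No); missing items count as 0
    global_rating: int  # 1 (Unsatisfactory) to 5 (Excellent)

    def __post_init__(self):
        if not 1 <= self.global_rating <= 5:
            raise ValueError("global rating must be between 1 and 5")

    def checklist_total(self) -> int:
        return sum(self.checklist.get(item, 0) for item in CHECKLIST_ITEMS)

    def checklist_percent(self) -> float:
        return 100 * self.checklist_total() / len(CHECKLIST_ITEMS)

    def global_percent(self) -> float:
        # Map 1..5 onto 0..100 so both parts share a scale.
        return 100 * (self.global_rating - 1) / 4

    def composite(self, checklist_weight: float = 0.5) -> float:
        # Hypothetical equal weighting; programs set their own weights.
        return (checklist_weight * self.checklist_percent()
                + (1 - checklist_weight) * self.global_percent())


if __name__ == "__main__":
    ticks = {item: 1 for item in CHECKLIST_ITEMS[:6]}  # first six behaviors observed
    score = StationScore(checklist=ticks, global_rating=3)
    print(score.checklist_total(), score.checklist_percent(),
          score.global_percent(), score.composite())
```

For a learner who earns 6 of 8 checklist points and a global rating of 3, `checklist_percent()` returns 75.0, `global_percent()` returns 50.0, and `composite()` returns 62.5.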

Reliability and Validity

- Inter-rater reliability improves when multiple evaluators independently score the same performance. Recording makes this practical, because every examiner can review exactly the same encounter.

- Content validity depends on how well the station blueprint covers the intended competencies.

- Construct validity is supported when OSCE results align with performance in other assessments, such as EPAs (triangulation).
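One common way to quantify agreement between two examiners is Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain, unweighted implementation applied to hypothetical global ratings from two examiners. For ordinal scales like the 1–5 global rating, a weighted kappa or an intraclass correlation is often preferred, and an established statistics package is the safer choice for formal reliability analysis.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same performances."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(rater_a)
    # Observed agreement: share of performances given the same rating.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over categories of the two raters' marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical global ratings (1-5) from two examiners for eight recorded performances.
    examiner_1 = [3, 4, 2, 5, 3, 4, 3, 2]
    examiner_2 = [3, 4, 3, 5, 3, 3, 3, 2]
    print(round(cohens_kappa(examiner_1, examiner_2), 2))
```

With these example ratings, the examiners agree on 6 of 8 performances (0.75 raw agreement), chance agreement is 0.3125, and kappa is about 0.64.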

Feedback Integration

After completing the station, learners should receive both quantitative scores (checklist and global rating scale) and qualitative feedback. Narrative comments from examiners should highlight specific behaviors, using examples from the interaction. For instance: “You summarized the physiotherapist’s point well but then moved directly to proposing a plan without inviting the nurse to share concerns.” This level of detail grounds feedback in observable actions rather than vague impressions.

A crucial step is self-reflection. Learners can review their recorded performance and compare their own observations with examiner comments. Structured reflection prompts (such as identifying two strengths and one area for improvement) encourage metacognitive awareness. Peer feedback can also be layered in, allowing learners to benchmark themselves against colleagues.

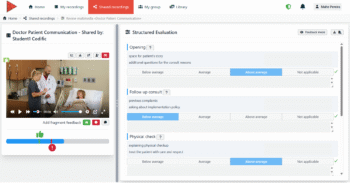

Step-by-Step Guide to Video-Based OSCEs on Videolab

- Define competencies and station design. Trainers begin by aligning OSCE stations with the program’s competency framework. For example, a station may focus on breaking bad news to a patient, drawing from communication models like SPIKES.

- Prepare evaluation rubrics. Load structured checklists into Videolab. This ensures each evaluator uses the same tool, reducing variability.

- Set up secure recording. Use the Videolab Recorder App on tablets or the CloudControl system for multi-camera labs.

- Conduct the OSCE. Learners complete stations while evaluators either observe live or review later. Asynchronous review improves efficiency and provides faculty with the flexibility to review the video multiple times for more comprehensive feedback.

- Feedback and reflection. Trainers provide annotated feedback, while learners engage in self-reflection by reviewing their own recordings. This combination strengthens metacognition.

- Data integration. Results are exported into institutional systems, providing a transparent record for accreditation and learner tracking.
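In practice, data integration often comes down to a validated, flat export that other systems can import. The sketch below uses Python’s standard `csv` module to write station results as CSV after basic range checks. The column names and checks are hypothetical and do not describe Videolab’s actual export format.

```python
import csv
import io

# Hypothetical export columns; not Videolab's actual schema.
FIELDS = ["learner_id", "station", "examiner", "checklist_total", "global_rating"]
MAX_CHECKLIST = 8  # eight checklist items in the example station


def validate(row):
    """Reject rows with out-of-range scores before they reach other systems."""
    if not 0 <= row["checklist_total"] <= MAX_CHECKLIST:
        raise ValueError(f"checklist_total out of range: {row}")
    if not 1 <= row["global_rating"] <= 5:
        raise ValueError(f"global_rating out of range: {row}")


def export_csv(rows):
    """Validate every row, then return all results as CSV text."""
    for row in rows:
        validate(row)
    buffer = io.StringIO()
    # DictWriter raises ValueError if a row has keys not listed in FIELDS.
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    results = [
        {"learner_id": "L001", "station": "Discharge planning", "examiner": "E1",
         "checklist_total": 6, "global_rating": 3},
        {"learner_id": "L001", "station": "Discharge planning", "examiner": "E2",
         "checklist_total": 7, "global_rating": 4},
    ]
    print(export_csv(results))
```

Validating before writing means a single bad score stops the export instead of silently reaching a learner portfolio.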

Benefits of Video-based OSCEs


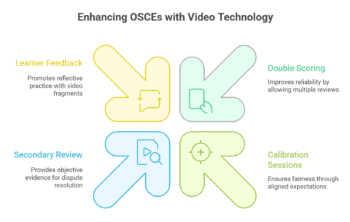

- Double scoring for reliability analysis: Video-based OSCEs enhance both the reliability of assessment and the educational value for learners. Traditionally, examiners must observe and score performances in real time, which can lead to rushed judgments, missed behaviors, or inter-rater variability. With video, two or more assessors can score the same performance independently, revisit it at their own pace, and pause or replay critical moments. This supports more accurate scoring and allows agreement between examiners to be measured.

- Calibration and training: Faculty can score recorded examples together to align expectations and reduce bias. Such calibration sessions are essential for ensuring fairness, especially when OSCEs involve multiple stations and large numbers of examiners.

- Secondary review for borderline cases: Borderline or disputed performances can be re-scored by a second examiner from the recording. When appeals arise, recordings offer objective evidence of performance, reducing ambiguity and protecting both learners and institutions.

- Learner feedback with annotated video fragments: Watching their own performance promotes reflective practice, helping learners identify strengths and areas for growth. Trainers can provide fragment-specific feedback by linking comments to precise moments in the video, which learners can then review as often as they need.
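Because recordings make re-scoring practical, a program can decide in advance which performances go to secondary review. The sketch below shows one possible rule for double-scored performances: flag a case when the two examiners’ global ratings differ by more than one point, or when either examiner chose 2 (Borderline). Both thresholds and the learner IDs are hypothetical and would be set locally.

```python
def needs_secondary_review(rating_a, rating_b, max_gap=1, borderline=2):
    """Flag a double-scored performance for another look (thresholds are hypothetical)."""
    disagreement = abs(rating_a - rating_b) > max_gap
    near_cut = borderline in (rating_a, rating_b)
    return disagreement or near_cut


def review_queue(scores):
    """Return learner IDs whose paired global ratings meet either flag condition."""
    return [learner for learner, (a, b) in scores.items()
            if needs_secondary_review(a, b)]


if __name__ == "__main__":
    # Hypothetical paired global ratings (examiner 1, examiner 2) per learner.
    paired = {"L001": (4, 4), "L002": (2, 3), "L003": (5, 3), "L004": (3, 4)}
    print(review_queue(paired))
```

Here L002 is flagged because one examiner rated the performance Borderline, and L003 because the examiners are two points apart; L001 and L004 pass without review.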

Conclusion

OSCEs remain one of the most trusted tools in health professions education, but their technical complexity demands careful design and execution. By developing clear station blueprints, using hybrid rubrics, and incorporating structured feedback, trainers can improve both fairness and educational value. The integration of video recording adds another dimension of reliability, allowing for examiner calibration, transparent appeals, and richer learner reflection.

When OSCEs are combined with EPAs, they no longer stand as isolated assessments but instead form part of a continuum from simulated scenarios to authentic clinical responsibility. For trainers, this means moving beyond one-off examinations and building a defensible, evidence-based assessment strategy that prepares learners for the realities of modern healthcare practice.